SoC Design Methodology for Exascale Computing Shekhar Borkar

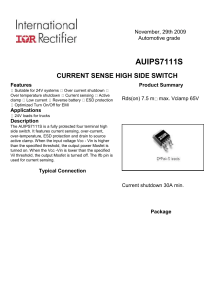


Shekhar Borkar, Intel Corp., June 7, 2015

This research was, in part, funded by the U.S. Government, DOE and DARPA. The views and conclusions contained in this document are those of the authors and should not be interpreted as representing the official policies, either expressed or implied, of the U.S. Government.

Outline

- Exascale motivation & challenges
- Future Exascale straw-man system design
  - Compute
  - Memory
  - Interconnect
- Design challenges and solutions
- Choice of process technology
- SoC challenges

From Giga to Exa, via Tera & Peta

[Figure: relative transistor performance (log scale, 1 to 1000) versus year, 1986 to 2016, with the Giga, Tera, Peta and Exa milestones.]

- Giga to Tera: 32x from transistor, 32x from parallelism
- Tera to Peta: 8x from transistor, 128x from parallelism
- Peta to Exa: 1.5x from transistor, 670x from parallelism

System performance comes from parallelism.

Where is the Energy Consumed?

| Component | Teraflop system today | Goal |
|---|---|---|
| Decode and control, address translations, power supply losses (bloated with inefficient architectural features) | 600W | 5W |
| Disk (10TB disk @ 1TB/disk @ 10W) | 100W | ~3W |
| Com (100pJ com per FLOP) | 100W | ~5W |
| Memory (0.1B/FLOP @ 1.5nJ per Byte) | 150W | 2W |
| Compute (50pJ per FLOP) | 50W | 5W |
| Total | ~1KW | ~20W |

The UHPC Challenge (DARPA, Ubiquitous HPC Program)

Target: 20 pJ/Operation

- 20 µW, Mega
- 20 mW, Giga
- 2W, 100 Giga
- 20W, Tera
- 20KW, Peta
- 20MW, Exa

Top Exascale Challenges

1. System power & energy
2. New, efficient memory subsystem
3. Extreme parallelism, data locality, gentle-slope programmability
4. New execution model comprehending self awareness with introspection
5. Resiliency to provide system reliability
6. System efficiency & cost

Exascale HW Design Challenges

1. NTV logic & memory for low energy
   - Break the Vmin barrier
   - FPU, logic & latches
   - Register files, SRAM
   - [Figure: 65nm CMOS, 50°C. Maximum frequency (MHz), total power (mW), energy efficiency (GOPS/Watt) and active leakage power (mW) versus supply voltage (0.2 to 1.4 V); subthreshold region and 320mV marked; 9.6X annotation.]
2. Coping with variations due to NTV
   - Frequency degradation
   - Variation in performance
   - Functionality loss
   - [Figure: relative frequency and frequency spread versus relative Vdd, for +/- 5% variation in Vdd or Vt.]
3. Fine grain power management
   - Voltage regulator: buck or switched cap
   - Power gating
   - Frequency control
4. Instrumentation for introspection
   - Energy measurement
   - Performance feedback
   - Ambient conditions
5. Hierarchical, heterogeneous IC
   - Busses, X bars, circuit & packet switched
   - Bus to connect over short distances, with a 2nd level bus above
   - Hierarchy of busses, or hierarchical circuit and packet switched networks
6. Overhaul memory subsystem
   - Traditional DRAM: activates many pages; lots of reads and writes (refresh); small amount of read data is used; requires small number of pins
   - New DRAM architecture: activates few pages; reads and writes (refreshes) what is needed; all read data is used; requires large number of IO's (3D)

Exascale SW Challenges

1. Extreme parallelism, O(billion)
   - Programming model
   - Data locality
   - Legacy compatibility
   - [Figure: concurrency and transistor performance (log scale) versus year, 1986 to 2016, for Giga, Tera, Peta and Exa; concurrency annotations 36X, 4,000X and 2.5M X.]
2. Programming model & system
   - Data flow inspired
   - Gentle slope programming (productivity to high performance)
   - [Diagram: a task with dependencies, resources, events, code for task, and local data for task (can use global data from anywhere in system).]
3. New execution model
   - Event driven
   - Asynchronous
   - Dynamic scheduling
   - Runtime system
   - [Diagram: a codelet moving between waiting deps, ready Q and running Q.]
4. Self awareness
   - Observation based
   - Monitor, continuously adapt
   - Objective function based runtime optimization
5. Challenge applications: SAR, Graph, Decision, Shock, Mol Dyn
   - New algorithms
   - Locality friendly
   - Immunity to tapered BW
6. System level resiliency research
   - Layers: applications; system software; programming system; microcode, platform; microarchitecture; circuit & design
   - Error detection, fault isolation, fault confinement, reconfiguration, recovery & adapt

Exascale SoC Processor Straw-man

[Diagram: simple core (RF, Dec, FPMAC, I$, D$ (64KB)); cluster and block with large shared cache/mem; SoC with interconnect fabric, mem controller and IO (PCIe).]

| SoC Targets (5 nm) | Unit | Value |
|---|---|---|
| Die size | mm | ~25 |
| Frequency | GHz | >2 |
| Cores | | ~4096 |
| FP perf | Tera-Flops | >16 |
| Power | Watts | < 100 |
| Energy efficiency | pJ/FLOP | 4-6 |
| Energy efficiency | GF/Watt | > 200 |

Node

[Diagram: processor node. The SoC connects to 3D LPDDR (high performance, low power, ~1 TB, 1 TB/s total BW), to DDRx (high capacity, low cost, 4 TB) and to the IC fabric.]

Interconnect Switch Straw-man

[Diagram: switching fabric with arbitration logic; ports with data buffers and electrical PHY or optical PHY; local electrical switch network.]

On-die Data Movement vs Compute

[Figure: relative compute energy and on-die IC energy/mm versus technology (90, 65, 45, 32, 22, 14, 10, 7 nm); annotations 60% and 6X. Source: Intel.]

- Interconnect energy (per mm) reduces slower than compute
- On-die data movement energy will start to dominate

Interconnect vs Switch Energy

[Figure: delay (ps) and energy (pJ/bit) versus on-die interconnect length (0 to 25 mm); curves for repeated wire delay, wire energy, switch delay, switch energy, and switch energy with scaling.]

Interconnect Structures

| Structure | Energy | Distance | Scalability |
|---|---|---|---|
| Shared bus (buses over short distance) | 1 to 10 fJ/bit | 0 to 5mm | Limited |
| Multi-ported memory (shared memory) | 10 to 100 fJ/bit | 1 to 5mm | Limited |
| Cross bar switch (X-Bar) | 0.1 to 1pJ/bit | 2 to 10mm | Moderate |
| Packet switched network | 1 to 3pJ/bit | >5 mm | Scalable |

[Diagram: clusters connect through switches to first level and second level switches, across board, cabinet and system.]

Hierarchy & heterogeneity.

Exascale HW System

[Figure: exascale hardware system.]

Bandwidth Tapering

[Figure: Byte/FLOP (log scale) at each level (Core, L1, L2, Chip, Board, Cab, Sys) for two tapering schemes, "Naïve, 4X" and "Severe", with 8 Byte/Flop total; data labels 24, 6.65, 1.13, 0.19, 0.03, 0.0045, 0.0005, 0.00005. Two panels of data movement power (MW) per level (L1, L2, Chip, Board, Cab, Sys), annotated "Total DM Power = 217 MW" and "Total DM Power = 3 MW".]

Intelligent BW tapering is necessary.

NTV Operation for Energy Efficiency

- 10X increase in energy efficiency
- Leakage power dominates
- Variations become worse
  - Die to die frequency
  - Across temperature

H. Kaul et al., "A 320mV 56μW 411GOPS/Watt Ultra-Low-Voltage Motion Estimation Accelerator in 65nm CMOS", ISSCC 2008, paper 16.6.
H. Kaul et al., "Near-Threshold Voltage (NTV) Design: Opportunities and Challenges", DAC, 2012.

NTV Design Considerations (1)

[Circuit diagrams; signals wrwl, wrwl#, wrbl, wrbl#, rdwl, rdbl, bit, bitx.]

- Conventional dual-ended write RF cell (contention)
- NTV friendly, dual-ended transmission gate RF cell (two paths write "0", two paths write "1")
- NTV friendly flip-flop
- NTV friendly vector flip-flop

H. Kaul et al., "Near-Threshold Voltage (NTV) Design: Opportunities and Challenges", DAC, 2012.

NTV Design Considerations (2)

- NTV friendly multiplexer
- Ultra-low voltage, split-output level shifter
- Two-stage, cascaded split-output level shifter

Integration of IP Blocks

[Diagram: integrated voltage regulator & control (Vdd, L, Vout) with Power Enable, Power Enable# and Clock Enable signals.]

- Synthesizing clock gating
- Synthesizing power gating
- Integrated voltage regulators
- Enable DVFS and NTV operation

Other traditional IP blocks: accelerators, memory controller, PCIe, etc.

Choice of Technology

22 nm SoC technology

[Figure: relative frequency and relative SD leakage power (log scale) for the HP, Std, LP and ULP process options; annotations "30% drop" (frequency) and "100X drop" (leakage).]

C.-H. Jan et al., "A 22nm SoC Platform Technology Featuring 3-D Tri-Gate and High-k/Metal Gate, Optimized for Ultra-Low Power, High-Performance and High-Density SoC Applications", IEDM 2012.

SoC Design Challenges

| Challenge | Potential Solutions |
|---|---|
| 1,000's of cores, large die, WD & DD variations | Variation tolerance; dynamic, system level adaptation |
| Voltage scaling to NTV | New standard cell library? |
| Data movement dominates | Interconnect optimization |
| Multi-ported, various sizes of RF and memories | Memory and RF compilers |
| Integration of custom and off-the-shelf IP blocks | Soft IP, not hard IP |
| Complexity | Formal verification |
| Silicon cost | Architectural simplicity |
| Design for system efficiency | System level optimization tools |
| Process technology | HP vs LP: judicious choice |
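
The power figures quoted across the deck (the 20 pJ/Operation UHPC target, the per-FLOP energies of today's teraflop system, and the straw-man SoC targets) all follow from one relation: power equals operation rate times energy per operation. The sketch below checks that arithmetic. The function and variable names are illustrative assumptions, not from the slides, and it assumes sustained peak rates.

```python
# Power implied by an energy-per-operation budget: P = rate * E_op.
# All names here are hypothetical; only the input numbers come from the slides.
PJ = 1e-12  # joules per picojoule


def power_watts(ops_per_second: float, pj_per_op: float) -> float:
    """Average power in watts for a sustained operation rate."""
    return ops_per_second * pj_per_op * PJ


# UHPC target of 20 pJ/operation at each performance scale.
SCALES = {"Mega": 1e6, "Giga": 1e9, "100 Giga": 1e11,
          "Tera": 1e12, "Peta": 1e15, "Exa": 1e18}
uhpc = {name: power_watts(rate, 20.0) for name, rate in SCALES.items()}

# Teraflop system today, using the per-FLOP and per-byte energies quoted.
TERA = 1e12
compute_w = power_watts(TERA, 50.0)          # 50 pJ per FLOP
com_w = power_watts(TERA, 100.0)             # 100 pJ com per FLOP
memory_w = power_watts(0.1 * TERA, 1500.0)   # 0.1 B/FLOP at 1.5 nJ per byte

# Straw-man SoC: 16 TFLOPS at the two ends of the 4-6 pJ/FLOP range.
soc_w = [power_watts(16 * TERA, e) for e in (4.0, 6.0)]
gflops_per_watt = [16e3 / w for w in soc_w]  # 16 TFLOPS = 16e3 GFLOPS

if __name__ == "__main__":
    for name, watts in uhpc.items():
        print(f"{name:>8}: {watts:.3g} W")
    print(f"compute {compute_w:.0f} W, com {com_w:.0f} W, memory {memory_w:.0f} W")
    for w, g in zip(soc_w, gflops_per_watt):
        print(f"SoC: {w:.0f} W, {g:.0f} GF/W")
```

Under these assumptions the straw-man's "> 200 GF/Watt" target corresponds to 5 pJ/FLOP or better, so only the lower part of the 4-6 pJ/FLOP range meets it.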