Presentation 20 Dec 2015 Dialogue systems

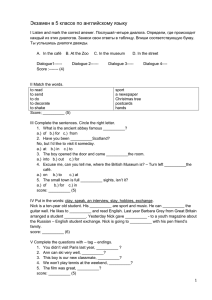

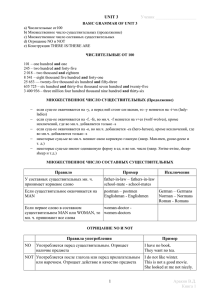

Dialogue Systems: End-to-End Review
Vladislav Belyaev, DeepHackLab, 20 December 2015

Agenda
1. Dialogue System: objectives and problems
2. Architectures
   2.1. Char-rnn
   2.2. Sequence-to-sequence (seq2seq)
   2.3. Hierarchical recurrent encoder-decoder (HRED)
   2.4. Attention with Intention (AWI)
   2.5. Memory Networks
3. Summary

Dialogue System
A.M. Turing. Computing machinery and intelligence. Mind, pages 433–460, 1950

Dialogue System
- Goal-driven and non-goal-driven
- Deterministic (rule-based, IR) and generative
- Large dialogue corpora (0.1 or 1 billion words)
- Natural and unnatural; can depend on external unobserved events

Preprocessing
- Remove anomalies (acronyms, slang, misspellings and phonemicization)
- Tokenization, stemming and lemmatization

Segmentation
- Speaker segmentation (diarisation)
- Conversation segmentation

Evaluation metric / Problems
- How to measure sense?
- Generalization to previously unseen situations
- Highly generic responses
- Diversity of corpora

Serban I.V. et al. (2015), A Survey of Available Corpora For Building Data-Driven Dialogue Systems

Dialogue System: Evaluation metrics
- Goal-driven: goal-related performance criteria (including a user simulator)
- Non-goal-driven:
  - “Naturalness”: human evaluation (+)
  - BLEU/METEOR score (-)
  - Next utterance classification (+)
  - Word perplexity (+)
  - Response diversity (in combination)

A.M. Turing. Computing machinery and intelligence. Mind, pages 433–460, 1950
Serban I.V. et al. (2015), A Survey of Available Corpora For Building Data-Driven Dialogue Systems

Dialogue System - Business
Without neural nets!
- http://www.nextit.com/case-studies/amtrak/
- http://www.nextit.com/case-studies/alaska-airlines/

Dialogue System - Business
- Development costs (hand-crafted features)
- Domain limitations

Development based on linguistic rules
- Steps: start of system development, knowledge base creation, testing, deployment
- Long development time (3-5 months)
- High cost

Neural-network-based development
- Steps: start of system development, neural network training, testing, deployment
- Fast development (2-3 weeks)
- Low cost

Architectures

| Architecture | Opportunities / limitations | Data size | Papers | Code |
|---|---|---|---|---|
| Char-rnn | Well suited to morphologically rich languages; memory limited by RAM; hard to remember facts | Several tens of millions of words or more works better | http://karpathy.github.io/2015/05/21/rnn-effectiveness/ http://arxiv.org/abs/1508.06615 | https://github.com/karpathy/char-rnn https://github.com/yoonkim/lstm-char-cnn |
| Seq2seq | Has no dialogue state; no really long-term dependencies (tricky play) | For NCM: help desk, 30M tokens; OpenSubtitles, 923M tokens | http://arxiv.org/abs/1506.05869 | https://www.tensorflow.org/versions/master/tutorials/seq2seq/index.html#sequence-to-sequence-models https://github.com/macournoyer/neuralconvo |
| HRED | Has a dialogue state representation; hard to remember facts | More than 1B tokens recommended | http://arxiv.org/abs/1507.04808 http://arxiv.org/abs/1507.02221 | https://github.com/julianser/hed-dlg https://github.com/julianser/hed-dlg-truncated |
| AWI | Amazing results (a dialogue state and attention), but no code and an in-house dataset | 4.5M tokens (1000 dialogues) | http://arxiv.org/abs/1510.08565 | - |
| MemNN | Can perform QA, recommendation and chitchat | 3.5M training examples (4 datasets for 4 tasks), http://fb.ai/babi | http://arxiv.org/abs/1511.06931 | https://github.com/carpedm20/MemN2N-tensorflow https://github.com/facebook/SCRNNs |

Char-rnn
http://karpathy.github.io/2015/05/21/rnn-effectiveness/
Y. Kim et al. (2015), Character-Aware Neural Language Models

Sequence-to-sequence
- 4 LSTM layers with 1000 memory cells (each additional layer reduced perplexity by nearly 10%)
- Input vocabulary of 160k words
- Output vocabulary of 80k words
- 1000-dimensional word embeddings (for 300M words)
- Grouping sentences by length (perplexity 5.8)
- Reversing source sentences (perplexity 4.7)

Ilya Sutskever, Oriol Vinyals, Quoc V. Le (2014), Sequence to Sequence Learning with Neural Networks

Sequence-to-sequence
Ilya Sutskever, Oriol Vinyals, Quoc V. Le (2014), Sequence to Sequence Learning with Neural Networks
https://www.tensorflow.org/versions/master/tutorials/seq2seq/index.html#sequence-to-sequence-models
http://googleresearch.blogspot.ca/2015/11/computer-respond-to-this-email.html

Sequence-to-sequence

Help desk (30M tokens)
- A single-layer LSTM with 1024 memory cells
- The vocabulary consists of the 20K most common words
- The model achieved a perplexity of 8; an n-gram model achieved 18

OpenSubtitles (923M tokens)
- A 2-layer LSTM with 4096 memory cells + 2048 linear units
- The vocabulary consists of the 100K most frequent words
- The model achieved a perplexity of 17; a 5-gram model achieved 28

Oriol Vinyals, Quoc V. Le (2015), A Neural Conversational Model

Hierarchical recurrent encoder-decoder
Iulian V. Serban et al. (2015), Building End-To-End Dialogue Systems Using Generative Hierarchical Neural Network Models

Hierarchical recurrent encoder-decoder
For the baseline RNN, we tested hidden state spaces d_h = 200, 300 and 400. For HRED we experimented with encoder and decoder hidden state spaces of size 200, 300 and 400. Based on preliminary results and due to GPU memory limitations, we limited ourselves to size 300 when not bootstrapping or bootstrapping from Word2Vec, and to size 400 when bootstrapping from SubTle. Preliminary experiments showed that the context RNN state space at and above 300 performed similarly, so we fixed it at 300 when not bootstrapping or bootstrapping from Word2Vec, and to 1200 when bootstrapping from SubTle.
For all models, we used word embeddings of size 400 when bootstrapping from SubTle and of size 300 otherwise.
Iulian V. Serban et al. (2015), Building End-To-End Dialogue Systems Using Generative Hierarchical Neural Network Models

Hierarchical recurrent encoder-decoder

Model configuration (30k vocab, 77M words, perplexity 39.0644):

    # Gradients will be truncated after 80 steps. This seems like a fair start.
    state['max_grad_steps'] = 80
    state['qdim_encoder'] = 3000
    state['qdim_decoder'] = 3000
    # Dimensionality of dialogue hidden layer
    state['sdim'] = 1000
    # Dimensionality of low-rank approximation
    state['rankdim'] = 1000

Sampling log (model output is in Russian):

    2015-12-20 11:37:04,223: search: DEBUG: adding sentence [16, 306, 9294, 17, 15421, 10, 1] from beam 0
    2015-12-20 11:37:04,223: search: DEBUG: partial -> боже , нет . нет , мама
    2015-12-20 11:37:04,223: search: DEBUG: partial -> - вот гений ! - рад это
    2015-12-20 11:37:04,223: search: DEBUG: partial -> конечно , он твой приятель один .
    2015-12-20 11:37:04,223: search: INFO: RandomSampler : sampling step 7, beams alive 3
    2015-12-20 11:37:04,330: search: DEBUG: partial -> боже , нет . нет , мама .
    2015-12-20 11:37:04,330: search: DEBUG: partial -> - вот гений ! - рад это слышать
    2015-12-20 11:37:04,330: search: DEBUG: partial -> конечно , он твой приятель один . он
    2015-12-20 11:37:04,330: search: INFO: RandomSampler : sampling step 8, beams alive 3
    2015-12-20 11:37:04,437: search: DEBUG: adding sentence [212, 11, 35, 10, 35, 11, 211, 10, 1] from beam 0
    2015-12-20 11:37:04,437: search: DEBUG: partial -> - вот гений ! - рад это слышать .
    2015-12-20 11:37:04,437: search: DEBUG: partial -> конечно , он твой приятель один . он тоже
    2015-12-20 11:37:04,437: search: INFO: RandomSampler : sampling step 9, beams alive 2
    2015-12-20 11:37:04,534: search: DEBUG: adding sentence [18, 77, 3610, 22, 18, 458, 20, 1217, 10, 1] from beam 0
    2015-12-20 11:37:04,535: search: DEBUG: partial -> конечно , он твой приятель один . он тоже там
    2015-12-20 11:37:04,535: search: INFO: RandomSampler : sampling step 10, beams alive 1
    2015-12-20 11:37:04,624: search: DEBUG: partial -> конечно , он твой приятель один . он тоже там был
    2015-12-20 11:37:04,624: search: INFO: RandomSampler : sampling step 11, beams alive 1
    2015-12-20 11:37:04,712: search: DEBUG: partial -> конечно , он твой приятель один . он тоже там был .
    2015-12-20 11:37:04,713: search: INFO: RandomSampler : sampling step 12, beams alive 1
    2015-12-20 11:37:04,801: search: DEBUG: adding sentence [139, 11, 25, 162, 846, 151, 10, 25, 119, 98, 62, 10, 1] from beam 0

Ranked samples (score: sample):

    2.68409920732: боже , нет . нет , мама .
    3.10118466318: - вот гений ! - рад это слышать .
    3.28937990442: и убил тебя .
    3.72474860085: конечно , он твой приятель один . он тоже там был .
    5.60221603406: ты дом налоги и болтаешь .

English glosses of the ranked samples, in the same order:
- god , no . no , mom .
- - what a genius ! - glad to hear it .
- and killed you .
- of course , he is your buddy alone . he was there too .
- you house taxes and chatter .

Hierarchical recurrent encoder-decoder

Sample dialogue with the same model and configuration (30k vocab, 77M words, perplexity 39.0644), translated from Russian:
- User: why do you think AI is safe?
- AI: because it is true .
- User: and who else knows about this?
- AI: she is not aware .
- User: who is she?
- AI: i am your daughter .
- User: I have no children!
- AI: okay .
- User: and anyway, we should cut the power for answers like that.
- AI: god , no . no , mom .
- User: ok, so AI is safe, right?
- AI: as i was saying ...
- User: and what else are you busy with?
- AI: a lot of concerns , your honor .
- User: wait a minute, where did the daughter go?
- AI: no , he just wants her to feel better .
- User: who is he? how many of you are in there?
- AI: but she is here .

Attention with Intention
4.5M tokens (1000 dialogues)
Kaisheng Yao et al. (2015), Attention with Intention for a Neural Network Conversation Model

Memory Networks
With additional modifications to construct both long-term and short-term context memories
Sukhbaatar et al. (2015), End-To-End Memory Networks
J. Dodge et al. (2015), Evaluating prerequisite qualities for learning end-to-end dialog systems

Summary
Our vision (DeepHackLab + NN&DL LAB MIPT)
- Large corpora (natural and unnatural): Where to find? Russian or not? Russian… How many words are enough?
- Pre-processing module: Do we need words? What is the best representation?
- End-to-end module: Architecture?
  - Natural language generation: The best suitable architecture?
  - Dialogue policy learning: Reinforcement learning?
  - Dialogue state tracking: What is the best representation?
- Q&A Knowledge module: Architecture? How to put it all together?
- Evaluation module: How to measure sense? How much is enough?

T. Mikolov et al. (2015), A Roadmap towards Machine Intelligence
J. Schmidhuber (2015), On Learning to Think: Algorithmic Information Theory for Novel Combinations of Reinforcement Learning Controllers and Recurrent Neural World Models

http://qa.deephack.me/

Thank you for your attention! Questions?

Bibliography
Key refs
1. Serban I.V. et al. (2015), A Survey of Available Corpora For Building Data-Driven Dialogue Systems http://arxiv.org/abs/1512.05742
2. Y. Kim et al. (2015), Character-Aware Neural Language Models http://arxiv.org/abs/1508.06615
3. Ilya Sutskever, Oriol Vinyals, Quoc V. Le (2014), Sequence to Sequence Learning with Neural Networks http://arxiv.org/abs/1409.3215
4. Oriol Vinyals, Quoc V. Le (2015), A Neural Conversational Model http://arxiv.org/abs/1506.05869
5. Iulian V. Serban et al. (2015), Building End-To-End Dialogue Systems Using Generative Hierarchical Neural Network Models http://arxiv.org/abs/1507.04808
6. Kaisheng Yao et al. (2015), Attention with Intention for a Neural Network Conversation Model http://arxiv.org/abs/1510.08565
7. J. Dodge et al. (2015), Evaluating prerequisite qualities for learning end-to-end dialog systems http://arxiv.org/abs/1511.06931
8. T. Mikolov et al. (2015), A Roadmap towards Machine Intelligence http://arxiv.org/abs/1511.08130
9. J. Schmidhuber (2015), On Learning to Think: Algorithmic Information Theory for Novel Combinations of Reinforcement Learning Controllers and Recurrent Neural World Models http://arxiv.org/abs/1511.09249
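
Supplementary sketch: two of the non-goal-driven evaluation metrics listed in the deck, word perplexity and response diversity, can be illustrated in a few lines of Python. This is a minimal sketch under stated assumptions, not the evaluation code used in any of the cited papers. The function names `word_perplexity` and `distinct_n` are hypothetical, the input probabilities and replies are made up, and the deck does not say which diversity measure it means, so the share of unique n-grams is used here as one common proxy.

```python
import math
from collections import Counter


def word_perplexity(token_log_probs):
    """Perplexity = exp of the negative mean natural-log probability per token."""
    if not token_log_probs:
        raise ValueError("need at least one token log-probability")
    return math.exp(-sum(token_log_probs) / len(token_log_probs))


def distinct_n(responses, n=1):
    """Fraction of n-grams across all responses that are unique (0.0 if none)."""
    counts = Counter()
    for tokens in responses:
        # Slide a window of length n over each tokenized response.
        for i in range(len(tokens) - n + 1):
            counts[tuple(tokens[i:i + n])] += 1
    total = sum(counts.values())
    return len(counts) / total if total else 0.0


if __name__ == "__main__":
    # Hypothetical per-token probabilities a model assigns to a reference reply.
    log_probs = [math.log(p) for p in (0.25, 0.25, 0.25, 0.25)]
    print(word_perplexity(log_probs))

    # Hypothetical generated replies; the repeated generic reply lowers diversity.
    replies = [
        ["i", "do", "not", "know"],
        ["i", "do", "not", "know"],
        ["he", "was", "there", "too"],
    ]
    print(distinct_n(replies, n=1), distinct_n(replies, n=2))
```

Lower perplexity means the model finds the reference text less surprising. A diversity score near zero signals the "highly generic responses" problem named earlier, which is one reason the deck suggests using diversity only in combination with other metrics.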